Bridging Knowledge Across Worlds: Transfer Learning and Domain Adaptation

Imagine a skilled violinist who decides to learn the cello. Although the instrument is different, the musician does not start from zero. Their sense of rhythm, finger dexterity, and understanding of musical scales transfer naturally, allowing them to adapt more quickly than someone without musical experience. Transfer learning in machine learning works in a similar way. Instead of training a model from scratch for every new problem, we allow it to carry forward knowledge from previous learning experiences. The goal is to use what has already been learned to accelerate learning in a new, related task.

This approach has become essential in a world where data is plentiful but labelled examples are often scarce. It saves time and computation, and it improves performance when labelled data for the new task is limited.

The Source and the Target: Passing Knowledge Forward

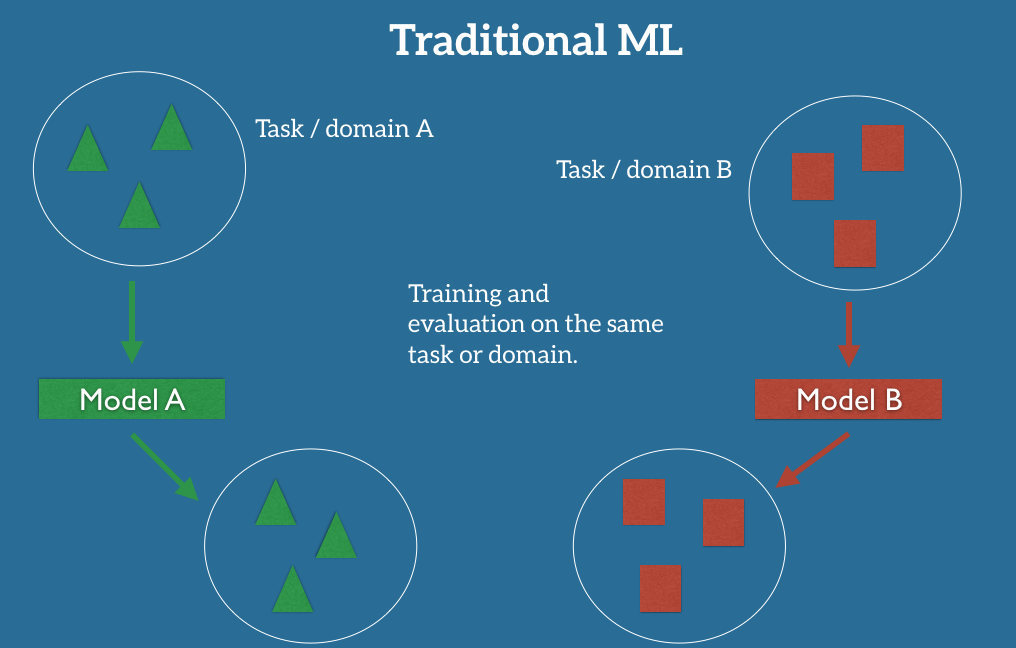

At the heart of transfer learning is the relationship between a source task and a target task. The model first learns general patterns from the source task, such as edges, shapes, and colours in images or semantic relationships in language. Once these foundational patterns are learned, they can be adapted to related tasks that share a similar structure.

For example:

- A model trained to recognise cars may also be useful for detecting trucks.

- A language model that learns sentence patterns from news articles can be adapted to customer email conversations.

- A medical image classification model trained on one type of scan can be adapted to another scan format.

Learners who study applied machine learning in structured environments, such as those pursuing an AI course in Mumbai, often encounter transfer learning early on because it mirrors how humans learn: by building on past experiences rather than beginning from zero each time.

Feature Extraction: Using What the Model Already Knows

Feature extraction is one of the simplest forms of transfer learning. In this strategy, we take a model that has already been trained and reuse its internal representation of data. Deep neural networks, for example, learn a hierarchy of features, ranging from simple edges and textures in early layers to more abstract concepts in deeper layers.

In image tasks:

- Early layers detect lines and textures.

- Middle layers detect patterns like eyes or wheels.

- Later layers combine these into full shapes and objects.

Instead of retraining the whole model, we remove its final classifier, keep the remaining pretrained layers frozen, and train a new classifier tailored to the new task. Because the heavy lifting has already been done, the new learning phase is shorter and needs less data and computation.

This approach works beautifully when the source and target tasks share structural similarities, even if they are not identical.
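The following is a minimal plain-Python sketch of feature extraction. It assumes a hypothetical "pretrained" backbone (`FROZEN_WEIGHTS` followed by a ReLU) that stands in for the early and middle layers of a real network. It also uses a small, made-up target dataset. Only the new logistic-regression head (`train_head`) is trained, and the backbone's weights are never updated. All names are illustrative and do not come from any library.

```python
import math

# Hypothetical stand-in for a pretrained backbone. In a real system these would be
# the early and middle layers of a network trained on the source task; here they are
# three hand-picked linear units followed by ReLU. They are frozen (never updated).
FROZEN_WEIGHTS = (
    (1.0, 0.0),
    (0.0, 1.0),
    (1.0, 1.0),
)


def extract_features(x):
    """Map a raw 2-D input to 3 features using the frozen weights and ReLU."""
    return [max(0.0, sum(wk * xk for wk, xk in zip(row, x))) for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=200, lr=0.5):
    """Train only a new logistic-regression head; the backbone is left untouched."""
    # Features can be computed once up front because the backbone is frozen.
    feats = [(extract_features(x), y) for x, y in data]
    w = [0.0] * len(FROZEN_WEIGHTS)
    b = 0.0
    for _ in range(epochs):
        for f, y in feats:
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    score = sum(wi * fi for wi, fi in zip(w, extract_features(x))) + b
    return 1 if sigmoid(score) >= 0.5 else 0


# Small, made-up labelled set for the target task.
target_data = [
    ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.0, 0.3], 0),
    ([0.9, 0.8], 1), ([1.0, 0.7], 1), ([0.8, 1.0], 1),
]
w, b = train_head(target_data)
print([predict(w, b, x) for x, _ in target_data])
```

The backbone is frozen, so its features are computed once and reused in every epoch. This is a large part of why feature extraction is cheap. In practice the same idea is usually written with a deep learning framework: you disable gradients for the pretrained layers and replace the final layer.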

Domain Adaptation: When Worlds Look Similar But Behave Differently

Domain adaptation becomes crucial when the source and target data come from different environments, so their statistical distributions differ. For example, a model trained on clear professional photos may struggle when faced with blurry phone images. The task is the same, but the data looks different.

Domain adaptation techniques help models adjust by:

- Aligning the statistical distributions of the source and target data

- Reweighting features to highlight patterns that matter in the new domain

- Fine-tuning model layers to reshape learned representations
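The first technique, aligning statistical distributions, can be illustrated with a deliberately simple sketch. Each target feature is shifted and rescaled so that its mean and standard deviation match the source data. The source-trained model can then be applied unchanged. The function names, the two features, and the data below are hypothetical. This per-feature moment matching needs no target labels, but it ignores correlations between features. Methods such as CORAL align full covariance matrices instead.

```python
import statistics


def column(rows, j):
    return [row[j] for row in rows]


def align_to_source(source, target):
    """Shift and rescale each target feature so its mean and population standard
    deviation match the source domain.

    A simplified per-feature moment-matching sketch, not a specific published method.
    A constant target feature is mapped to the source mean.
    """
    stats = []
    for j in range(len(source[0])):
        s_col, t_col = column(source, j), column(target, j)
        stats.append((statistics.mean(s_col), statistics.pstdev(s_col),
                      statistics.mean(t_col), statistics.pstdev(t_col)))
    aligned = []
    for row in target:
        new_row = []
        for x, (s_mu, s_sd, t_mu, t_sd) in zip(row, stats):
            z = (x - t_mu) / t_sd if t_sd > 0 else 0.0  # standardise within the target domain
            new_row.append(s_mu + z * s_sd)  # re-express using source statistics
        aligned.append(new_row)
    return aligned


# Made-up (brightness, sharpness) features for two hypothetical domains.
source = [[0.8, 0.9], [0.6, 0.7], [0.7, 0.8]]     # e.g. professional photos
target = [[0.5, 0.35], [0.3, 0.05], [0.4, 0.2]]   # e.g. blurrier phone photos

aligned = align_to_source(source, target)
for j in range(2):
    print(statistics.mean(column(aligned, j)), statistics.mean(column(source, j)))
```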

A clear example comes from speech recognition. A model trained on adult voices may struggle with children’s speech until it is adapted. The acoustic patterns differ, but the underlying structure of language stays the same.

This process requires careful calibration. Adapt too little, and the model stays tuned to the source domain. Adapt too much, and it may overfit the limited target data and lose the foundational knowledge it previously gained.

Fine-Tuning: The Balance Between Old Knowledge and New Insight

Fine-tuning partially retrains a pretrained model on new data. Rather than freezing every learned feature, it updates some layers (often the later, more task-specific ones) through small, gradual adjustments. This allows the model to maintain a general understanding while becoming more specialised.

However, fine-tuning requires caution. If updates are too aggressive, for example because the learning rate is too high, the model may “forget” what it learned before, a problem known as catastrophic forgetting. If updates are too gentle, the model may fail to adapt adequately. Skilful fine-tuning is like tuning an instrument: adjustments must be precise and intentional.
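The balance between old and new knowledge can be made concrete with a toy sketch. A single linear model stands in for a deep network, so each weight plays the role of a layer. A `trainable` mask freezes some weights. An optional `anchor` penalty pulls the remaining weights back toward their pretrained values, similar in spirit to L2-SP regularisation. The pretrained weights, data, learning rate, and penalty strength are all made up for illustration. Distance from the pretrained weights is only a rough proxy for forgetting.

```python
def fine_tune(pretrained, data, trainable, lr=0.05, anchor=0.0, epochs=500):
    """Full-batch gradient descent on mean squared error for a linear model
    y ~ w . x, starting from pretrained weights.

    trainable[i] is False -> weight i stays frozen (like a locked early layer).
    anchor > 0            -> adds anchor * (w_i - pretrained_i) ** 2 to the loss,
                             pulling weights back toward their pretrained values.
    """
    w = list(pretrained)
    n = len(data)
    for _ in range(epochs):
        grads = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i, xi in enumerate(x):
                grads[i] += 2.0 * err * xi / n  # gradient of the mean squared error
        for i in range(len(w)):
            if trainable[i]:
                g = grads[i] + 2.0 * anchor * (w[i] - pretrained[i])
                w[i] -= lr * g
    return w


def drift(w, pretrained):
    """Euclidean distance from the pretrained weights (a rough proxy for forgetting)."""
    return sum((a - b) ** 2 for a, b in zip(w, pretrained)) ** 0.5


pretrained = [1.0, 1.0, 1.0]      # hypothetical source-task weights
target_data = [                   # made-up target-task examples
    ([1.0, 0.0, 0.0], 1.0),
    ([0.0, 1.0, 0.0], 2.0),
    ([0.0, 0.0, 1.0], 3.0),
]
trainable = [False, True, True]   # freeze the first weight, fine-tune the rest

free = fine_tune(pretrained, target_data, trainable, anchor=0.0)
anchored = fine_tune(pretrained, target_data, trainable, anchor=1.0)
print(free, drift(free, pretrained))
print(anchored, drift(anchored, pretrained))
```

With `anchor=0.0`, the trainable weights move all the way to fit the target data (about 2.0 and 3.0). With `anchor=1.0`, they settle near 1.25 and 1.5, a compromise between the new data and the old weights. The frozen first weight stays at 1.0 in both runs.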

Professionals who practise these techniques often benefit from guided expertise, which is why structured environments such as an AI course in Mumbai emphasise hands-on experimentation with pretrained models, transfer mechanisms, and evaluation strategies.

Conclusion

Transfer learning reflects a deeply human way of learning. We do not restart every time we learn something new. We borrow, adapt, refine, and repurpose knowledge. Machine learning systems, when designed to transfer knowledge this way, become more efficient, more flexible, and more intelligent.

Feature extraction provides a strong foundation. Domain adaptation ensures relevance in new environments. Fine-tuning blends old insight with new requirements. Together, they create systems capable of learning quickly and performing strongly even when data is limited.

In a world where efficiency and adaptability matter, transfer learning stands as a powerful pillar, helping machines learn not just faster but also smarter.